Create An AWS S3 Website Using Terraform And GitHub Actions

We’ve talked a lot recently about infrastructure as code and setting up cloud environments. But nothing beats getting hands-on with a technology when you’re learning it. A workflow I’ve used a lot recently is Terraform (with remote state) running in a GitHub Actions pipeline. It’s cheap, straightforward and a great little workflow for creating cloud resources. Today, let me show you why.

So I thought setting up a basic workflow for creating a website would be a great hands-on way to get your head around a few different topics: AWS, Terraform and GitHub Actions. Today we’ll go through how to set up an S3 bucket (which can function as a website) in AWS and use a GitHub Actions pipeline to create the infrastructure and upload our files. **By the end of this article you’ll know how to configure an AWS S3 bucket using Terraform and deploy it using GitHub Actions.**

The Project Outline

Before we get into the weeds, let me first take you through the outline of what we’re going to cover today.

- **What is Terraform?** Understand what Terraform brings to the table and why it’s my infrastructure-as-code tool of choice.
- **What is GitHub Actions?** A quick look at what GitHub Actions is and why we’re using it today.
- **Setup your AWS Account:** How to prep your AWS account ready for the tutorial.
- **How to find your AWS access credentials:** Locate the access keys required to grant GitHub Actions permission to create your resources.
- **Configure Your AWS Provider:** How to tell Terraform to work with your AWS account.
- **Configure Terraform Remote State:** A prerequisite for running Terraform on a remote server; we’ll talk about why that is.
- **Write the Terraform for your S3 bucket:** We discuss how to represent an S3 bucket as a Terraform resource.
- **Create a pipeline in GitHub Actions:** We discuss how to configure GitHub Actions to push your S3 code and run Terraform.

I know it seems like a lot, but I promise it’s not so bad. We’ll go through each piece in isolation to make your life easier. Once you’re up and running you can start to experiment and explore the different aspects of the setup to gain a more complete understanding of what’s going on.

**Disclaimer:** In order to keep the tutorial as simple as we can, we’ll cut some corners (i.e. not explain some areas in as much detail as I would like). But I’ll let you know when we’re cutting a corner and what you can do to improve on it in future.

If that all sounds good — why don’t we get to it?

Preparation

Before we begin, set up a GitHub repo with the following structure…

1. What is Terraform?

Before we jump into the fine details, let’s take a moment to clarify what Terraform actually does for us, and why we’ve chosen it for this project…

Terraform is an infrastructure as code tool. Infrastructure as code tools allow us to define infrastructure, such as databases and web servers, in written code that the tool then turns into real resources. In our case, we’re going to use it to create an S3 bucket. An S3 bucket is an easy way to store files in AWS, and it can even act as a website.

So what are the reasons for choosing Terraform? To keep the justification simple, the main reason is that Terraform is platform agnostic. But what do I mean by “platform agnostic”? I mean that you can use Terraform to provision many different types of cloud resource, from GCP to Stripe apps. That’s great for your learning experience as you only have one tool to learn.

If you’re a bit shaky on the “why” behind infrastructure as code, be sure to check out: Infrastructure As Code: A Quick And Simple Explanation.

2. What is GitHub Actions?

The next question to answer is: what is GitHub Actions? And why have we chosen it? In short, GitHub Actions is GitHub’s answer to software pipelines. In essence, GitHub Actions allows you to run scripts when things happen on GitHub. You can upload files on push, or you can run scheduled jobs to do things like monitor running applications.

There’s a huge amount you can automate with GitHub Actions, but in our case we’re going to use it to upload our files and to run Terraform whenever we push code to our branch. GitHub Actions is also super convenient if you’re already storing code in GitHub, and its free tier means it’s easy on the wallet, too.

So that’s us now up to speed with the tools: Terraform and GitHub Actions. Now it’s time to get into the main event, which is setting up our S3 bucket.

The first step? Ensure our AWS account is set up properly.

3. Setup Your AWS Account

The first step when working with a tool like Terraform is to set up the thing that will contain our resources. Terraform is just a tool; it needs something to act upon in order to work. You can use Terraform against AWS, GCP and a whole list of other providers.

In today’s case our “thing that will contain our resources” is AWS, so we’re going to need an AWS account. If you haven’t already got one, head over to aws.amazon.com and get that set up.

As I said at the start, there are a few corners we’re going to cut today, and proper AWS account setup is one of them. But if you’re curious about account setup, be sure to check out: Your personal AWS setup (and how to not get hacked) and also Where (And How) to Start Learning AWS as a Beginner if you want more AWS know-how.

4. How To Find Your AWS Access Credentials

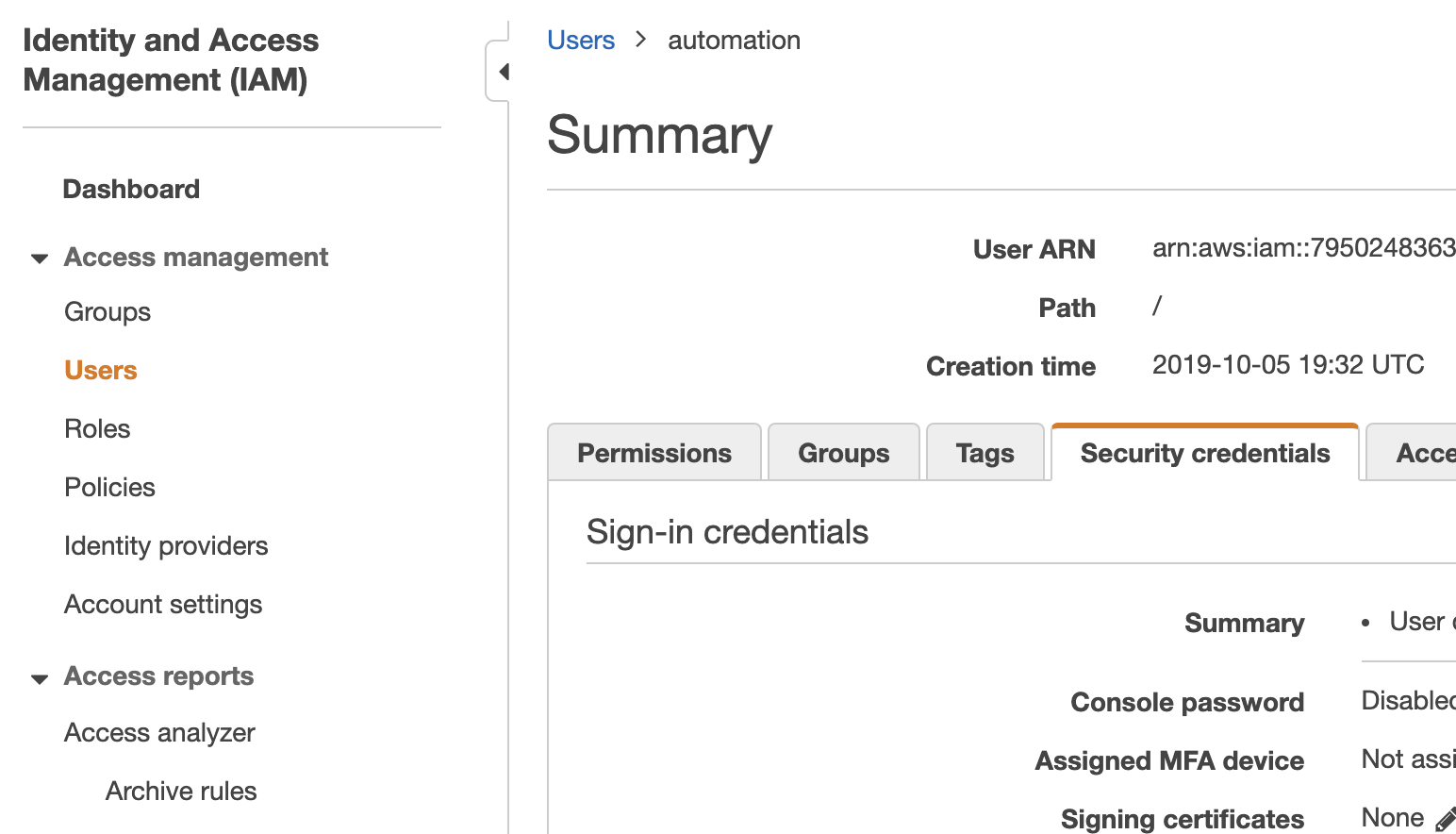

AWS IAM Credentials

Now that you’ve got your AWS account set up, we’ll need to find our credentials. Without credentials Terraform cannot access AWS to create our resources.

You can find your credentials by navigating to the IAM service within AWS and opening the “security credentials” tab. From here you can download your access key and secret key. You’ll need to give these credentials to GitHub Actions later, so stick them somewhere safe for now.

**Note:** The account associated with your access key will need to have access to the (yet to be created) S3 bucket. One of the simplest ways to do this is by granting your user the AmazonS3FullAccess IAM policy. But you should note that this gives your user full access to S3, which is an unnecessarily broad permission. You could later tighten this up by only granting read/write/delete access on your new S3 bucket.
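If you do come back to tighten things up, a narrower policy might look something like the sketch below. It is written in Terraform syntax, which we cover in the following sections, and it is an illustration rather than a drop-in replacement: the names `deploy` and `website-deploy` are made up for this example, and it only covers the read/write/delete side of things (i.e. uploading files). Terraform itself needs further bucket-level permissions to create and configure the bucket, plus access to the remote state bucket, so treat this as a starting point.

```hcl
# Hypothetical, narrower alternative to AmazonS3FullAccess.
# Covers listing the website bucket and reading/writing/deleting its objects.
data "aws_iam_policy_document" "deploy" {
  statement {
    sid       = "ListWebsiteBucket"
    effect    = "Allow"
    actions   = ["s3:ListBucket"]
    resources = ["arn:aws:s3:::[YOUR_BUCKET_NAME]"]
  }

  statement {
    sid       = "ReadWriteDeleteObjects"
    effect    = "Allow"
    actions   = ["s3:GetObject", "s3:PutObject", "s3:DeleteObject"]
    resources = ["arn:aws:s3:::[YOUR_BUCKET_NAME]/*"]
  }
}

# The policy name is an assumption; pick whatever suits your account.
resource "aws_iam_policy" "deploy" {
  name   = "website-deploy"
  policy = data.aws_iam_policy_document.deploy.json
}
```

You would then attach the resulting policy to the user whose access keys you hand to the pipeline, in place of the broader managed policy.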

For more details on AWS Access Keys, what they do, how they work and how best to work with them check out: AWS access keys — 5 Tips To Safely Use Them.

5. Configure Your AWS “Provider”

Awesome, now you should have an AWS account and access keys ready to go. What we want to do now is set up Terraform to reference our AWS account.

Go ahead and create a file (you can give it any name; in our case we’ve called it demo.tf) and add in the following code. This code block will tell Terraform that we want to provision AWS resources and that we’re defaulting resource creation to the eu-central-1 region within AWS (feel free to change the region if it’s important to you).
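A minimal provider block along these lines should do the job. This is a sketch based on the description above rather than the exact original snippet, and it assumes the credentials are supplied through the standard AWS environment variables instead of being written into the file.

```hcl
# demo.tf
# Tell Terraform to use the AWS provider and default to eu-central-1.
# Credentials are deliberately not set here; the provider reads them from
# the AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY environment variables.
provider "aws" {
  region = "eu-central-1"
}
```

Keeping the keys out of the file means the same configuration works both locally and in the pipeline, and nothing sensitive ends up in your repo.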

6. Configure Terraform Remote State

Now we’ve got our provider set up we’ll also want to configure our remote state. So go ahead and add the following config to your previously created file.

**Note:** We’ll discuss what to replace the YOUR_REMOTE_STATE_BUCKET_NAME and YOUR_REMOTE_STATE_KEY tokens with in just a moment.
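As a rough sketch, a remote state config using Terraform’s S3 backend looks like this. The two tokens are the placeholders mentioned above, and the region is assumed to match the one used for the provider; set it to wherever your state bucket actually lives.

```hcl
# Store Terraform state in an S3 bucket rather than in a local file.
terraform {
  backend "s3" {
    bucket = "YOUR_REMOTE_STATE_BUCKET_NAME" # the bucket that holds state
    key    = "YOUR_REMOTE_STATE_KEY"         # the name of this state within it
    region = "eu-central-1"                  # assumed: region of the state bucket
  }
}
```

Note that backend blocks can’t reference variables, so these values have to be written in literally.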

Understanding Terraform Remote State

You might be wondering what’s going on with our remote state. What even is remote state and why do we need it? Let’s answer that now: state is what Terraform uses to compare the current state (note the wording here) of your infrastructure against the desired state. You can either store this state locally (i.e. Terraform writes it to a file) or you can store it remotely.

We need to store our state remotely if we are to run Terraform on GitHub Actions. Without remote state, Terraform generates a local state file on the build machine, but nothing commits that file back to GitHub, and a GitHub-hosted build machine is thrown away when the job finishes. We’d lose the state data and end up in a sticky situation. With remote state we avoid this problem by keeping state out of our pipeline in separate persistent storage.

Create A Remote State Bucket

But in order to use remote state we need a bucket. So go ahead and create a bucket in your AWS account for your remote state. You might want to call it something like “my-remote-bucket”. You can accept pretty much all the defaults for your bucket, but do ensure that it’s private as you don’t want to share it with the world.

Once you’ve got your bucket, substitute its name for YOUR_REMOTE_STATE_BUCKET_NAME, and replace YOUR_REMOTE_STATE_KEY with the name of your (soon to be created) S3 bucket, i.e. my_lovely_new_website.

**Note:** The key in this context is the name under which the state of the currently provisioned resources is stored, as a single remote state bucket can hold state for many different Terraform configurations.

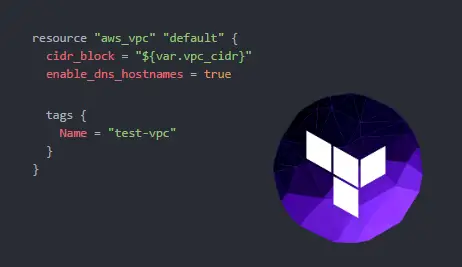

7. Write Your Terraform (The S3 Bucket)

Awesome, you’re almost there! We’ve got Terraform set up and configured; all that’s left to do is to script our resource so that Terraform knows what we want to create. At this point it’s also worth noting that you can easily swap out the S3 bucket resource for any other resource (or many resources) if you want, so the setup is reusable.

Since today we’re creating an S3 resource, let’s go ahead and do that. Add the following resource block to your .tf file. Be sure to substitute [YOUR_BUCKET_NAME] with the actual bucket name that you want to use for your resource. The policy is added just to ensure the bucket is publicly viewable (if you want to use it as a website).
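Here’s one way the bucket and its public-read policy could be written. Treat it as a sketch under a couple of assumptions: it targets version 4 or later of the AWS provider, where website hosting, public access settings and the bucket policy are separate resources (older versions let you set these on the bucket itself), and the resource names `website` and `public_read` are just labels chosen for this example.

```hcl
# The bucket itself.
resource "aws_s3_bucket" "website" {
  bucket = "[YOUR_BUCKET_NAME]"
}

# New buckets block public policies by default, so relax that first.
resource "aws_s3_bucket_public_access_block" "website" {
  bucket = aws_s3_bucket.website.id

  block_public_acls       = false
  block_public_policy     = false
  ignore_public_acls      = false
  restrict_public_buckets = false
}

# Serve index.html when the bucket is used as a website.
resource "aws_s3_bucket_website_configuration" "website" {
  bucket = aws_s3_bucket.website.id

  index_document {
    suffix = "index.html"
  }
}

# Allow anyone to read (but not write) objects in the bucket.
data "aws_iam_policy_document" "public_read" {
  statement {
    sid       = "PublicReadGetObject"
    effect    = "Allow"
    actions   = ["s3:GetObject"]
    resources = ["${aws_s3_bucket.website.arn}/*"]

    principals {
      type        = "*"
      identifiers = ["*"]
    }
  }
}

resource "aws_s3_bucket_policy" "public_read" {
  bucket = aws_s3_bucket.website.id
  policy = data.aws_iam_policy_document.public_read.json

  # The policy is rejected while public policies are still blocked.
  depends_on = [aws_s3_bucket_public_access_block.website]
}
```

If you don’t need the bucket to be a public website, you can drop everything except the first resource.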

8. Create Your Pipeline (GitHub Actions)

And we’re almost there. What you have so far is everything you need to create the bucket. You could in fact run it locally at this point, but ideally we want your configuration running on a build tool (in this case: GitHub Actions).

You might be wondering why I’m so insistent that we run our code on GitHub Actions, so let me quickly explain the reasoning behind adding your Terraform to a pipeline:

- **Pipelines Are More Visible:** If we’re working in a team, we can easily see what is running and when, as opposed to running things locally.
- **No Hung States:** If we’re in the middle of a deploy and our computer runs out of charge, our Terraform can (literally) end with bad state.
- **Traceability:** Pipelines usually store logs, which means we can review old builds and their output at a later point (if needed).
- **Repeatability:** A pipeline, once configured, should do the same thing every time, which makes debugging easier.
- **Simplicity:** A pipeline can be a substitute for your local machine, so you don’t need to set up local dependencies, which can be a lot simpler.

Hopefully you can start to see why I’m insistent on the pipeline for the infrastructure.

Adding AWS Credentials to GitHub

Before the pipeline will run, though, we need to go back to our AWS access credentials and add those to our repo. Navigate to GitHub and then to your repository, select the option for settings and then secrets. On the secrets screen you can add your AWS_SECRET_ACCESS_KEY and your AWS_ACCESS_KEY_ID to your repo.

In order to get our pipeline working, all you need to do is copy the following file and add it to your .github/workflows directory. And as before, don’t forget to substitute the [YOUR_BUCKET_NAME_HERE] token with your bucket name.

When you add this pipeline you’ll sync whatever is in your local src directory to your newly created S3 bucket. So be sure to add that folder and include an index.html file if you want to see anything useful after your S3 bucket is deployed.

Now when you next push your code, everything should complete easily. But, if you do happen to run into any issues with the setup be sure to double check the following commonly forgotten things:

- Does your AWS user have access?
- Are your credentials in AWS correct?
- Have you added your access key and secret to GitHub?
- Have you named everything consistently?
- Do you have the correct file structure as discussed (including all file extensions, etc.)?

Your Terraform Pipeline Is Complete!

And that really is everything. I appreciate we covered a lot of ground today, so thanks for sticking with it. You should now have a really cool setup that allows you to create Terraformed infrastructure in a repeatable and easy fashion. You can even take what we’ve done today and use it to provision GCP resources (if you fancy learning some GCP).

I hope that helped clear up some uncertainty on how to create a Terraform pipeline and how to use GitHub Actions for cheap, simple automation. Just remember to take what we’ve built today and try to push the limits with it. The more you push a new technology the better you’ll understand it.

Speak soon Cloud Native friend!

Lou Bichard

Hey I'm Lou! I'm a Cloud Software Engineer. I created Open Up The Cloud to help people grow their careers in cloud. Find me on Twitter or LinkedIn.
